Supercharge Your Development with PixLab Tools & APIs

Getting Started with PixLab Tools & APIs

Harness AI-powered tools for vision, automation, and media processing. Seamlessly integrate advanced APIs to enhance your app's visual capabilities, streamline workflows, and empower developers, businesses, and creators.

Empowering Innovation for Thousands of Developers and Businesses

Join a global community of developers and creatives leveraging PixLab’s AI-powered Tools, Models & APIs → to automate workflows, enhance creativity, and achieve exceptional results effortlessly.


150+ API Endpoints and growing...

Bring Vision to Your Apps

Discover PixLab's Powerful Tools & APIs

Access over 150 API Endpoints → and dozens of AI-driven tools & APIs →, crafted to revolutionize creativity, development, and automation. Whether you're a developer or a designer, PixLab's versatile applications unlock limitless potential to boost productivity and drive innovation.

API Portal

The PixLab API Portal is your gateway to explore and work with cutting-edge APIs. It guides developers toward the best API choices within a robust suite of APIs for vision and data extraction tasks, including:

- ID Scan & Extract API - Automate ID scanning and extraction using your favorite programming language with PixLab's ID Scan & Extract API.

- Vision Platform - Integrate with advanced Vision Language Model (VLM) tools and APIs for text analysis, document parsing, chunking, and data extraction.

- Vision, Media Analysis & Processing API Endpoints - Analyze, classify, and enhance images effortlessly.

- Rich PDF Generation API - Create dynamic, high-quality PDFs for your projects.

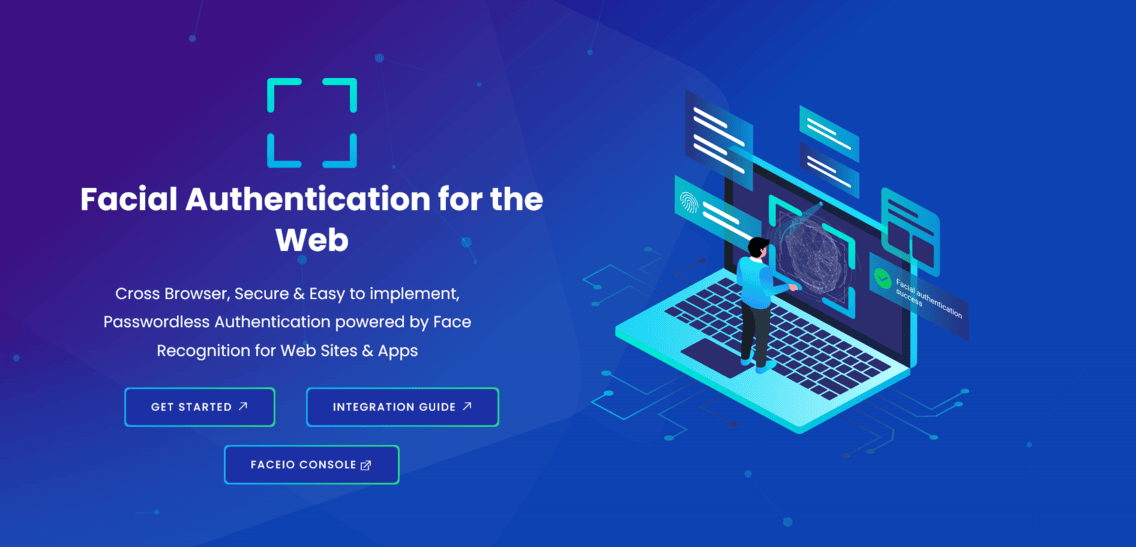

- FACEIO - Facial Authentication for the Web ↗ - A cross-platform Passwordless Authentication SDK for Websites & Apps.

Public API Endpoints

Access a powerful suite of tools powered by PixLab's Machine Vision & Media Processing APIs. Seamlessly integrate 150+ API endpoints to enhance automation, media analysis, and content generation. Explore detailed guides and code samples, and unlock capabilities such as:

- Explore Documentation for 150+ Intelligent API Endpoints →

- Vision & Media Analysis - Gain insights from your videos & images with advanced vision models.

- Image Processing - Edit and enhance images effortlessly.

- Smart Media Converter - Convert over 239 file formats securely and quickly, without data transfer.

- Pixelation & Content Moderation - Automatically filter sensitive content.

- Capture & Transform API - Extract, capture, and transform images and video frames.

- Background Removal - Remove backgrounds in seconds.

- Face Authentication & Recognition - Enable secure, passwordless login across all browsers using face recognition.

Document Intelligence & Extraction API

Streamline ID scanning and extraction with PixLab's ID Scan & Extract API. Recognize and process a wide range of documents, including ID cards, passports, and driver's licenses, with support for over 11,000 document types from 197+ countries. Get accurate ID scanning and structured data extraction, returned as parsed JSON, through PixLab's developer-friendly API platform.
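Because the extracted data arrives as JSON, client code typically decodes the body and checks that the fields it depends on are present before using them. The sketch below illustrates that pattern only. The container key `fields` and the field names `fullName`, `documentNumber`, `issuingCountry`, and `dateOfExpiry` are hypothetical placeholders, not the documented response schema of the ID Scan & Extract API; map them to the keys listed in the API documentation.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class IdDocument:
    # Hypothetical record shape for illustration; not PixLab's schema.
    full_name: str
    document_number: str
    country: str
    expiry_date: Optional[str] = None


# Hypothetical key names; replace with the documented response keys.
REQUIRED_KEYS = ("fullName", "documentNumber", "issuingCountry")


def parse_id_response(body: str) -> IdDocument:
    """Decode a JSON response body and extract the fields the app needs."""
    data = json.loads(body)
    fields = data.get("fields", {})  # hypothetical container key
    missing = [k for k in REQUIRED_KEYS if k not in fields]
    if missing:
        # Fail loudly instead of storing a partially scanned document.
        raise ValueError(f"response is missing fields: {', '.join(missing)}")
    return IdDocument(
        full_name=fields["fullName"],
        document_number=fields["documentNumber"],
        country=fields["issuingCountry"],
        expiry_date=fields.get("dateOfExpiry"),  # optional field
    )
```

Raising on missing required fields keeps incomplete scans out of downstream systems, while optional fields simply default to `None`.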

Media-Rich PDF Generation API

Easily create dynamic PDFs and media-rich templates with PixLab's Rich PDF Generation API. Customize content, streamline workflows, and produce professional designs for invoices, marketing materials, and more.

FACEIO - Passwordless Facial Authentication for Web & Apps

FACEIO ↗ by PixLab offers a secure, cross-browser facial recognition SDK for websites and web apps. Easily integrate passwordless authentication to manage sign-ins, user access, and attendance with unmatched simplicity and speed.

- Facial Authentication: Fast, secure login using facial recognition—no passwords or OTPs required.

- Attendance Tracking & Access Control: Monitor check-ins and manage secure access to systems or locations using facial identity—no punch cards or keycards needed.

- Passwordless Login: Enable secure, cross-browser authentication using facial recognition ↗.

- Age Verification: Instantly verify user age with high accuracy for compliance and content gating.

- Liveness Detection: Prevent spoofing and fraud through real-time analysis of facial movements.

Upgrade your security and user experience with FACEIO ↗ — the next-gen authentication layer for the web.

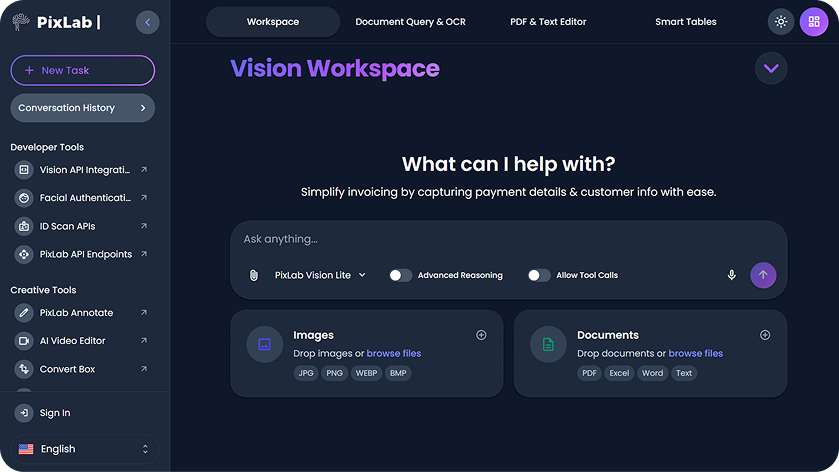

Vision Platform: Workspace, Agents, RAG, OCR, LLM Parse Tools & APIs

Accelerate development with PixLab's Vision Language APIs for text analysis, RAG, document parsing, and structured data extraction. Leverage Vision LLMs, OCR, AI Agents, and no-code automation via the Vision Workspace ↗. Easily integrate through PixLab's API Endpoints to streamline workflows and boost productivity.

Extract insights from PDFs, images, and Excel files. Automate invoice processing, accounting, and document parsing with the Vision Platform API. Use the interactive Workspace to chat with documents and extract data instantly.

Creativity Tools

Convert your media files online with PixLab Convert Box ↗. It supports over 239 media formats, including images, documents, audio, and videos. Conversion is secure, fast, and requires no data transfer. Learn more about Convert Box →

Create stunning UIs and reusable code for your mobile apps. Streamline app development with PixLab App UI/UX →.

Generate production-ready UI code ↗ for popular frameworks like SwiftUI, Flutter, React Native, and Jetpack Compose. Effortlessly design polished, high-performance user interfaces tailored to your needs.

Creator & Developer Toolbox

Generate clips and edit videos online. Instantly remove backgrounds from multiple images with PixLab's BG-REMOVE API Endpoint → or the bulk removal online app ↗, streamlining batch processing with precision and ease. Enhance your visuals with the Creative Toolbox ↗, perfect for crafting eye-catching designs, social media posts, and professional presentations.

- AI Video Editor - Generate clips and edit videos online right in your browser. Trim, cut, and merge clips, add captions and effects, and export to MP4/WebM. Powered by WebAssembly, WebGL/WebGPU, and PixLab VLM APIs.

- Batch Background Removal: Quickly remove backgrounds ↗ from multiple images in one go.

- Creative Editing Tools: Craft professional visuals with user-friendly online design tools ↗.

- Enhanced Productivity: Save time and effort with streamlined workflows.

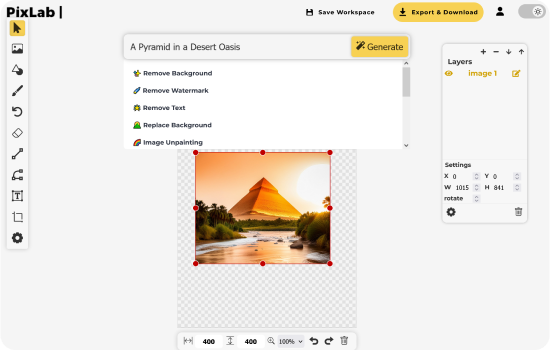

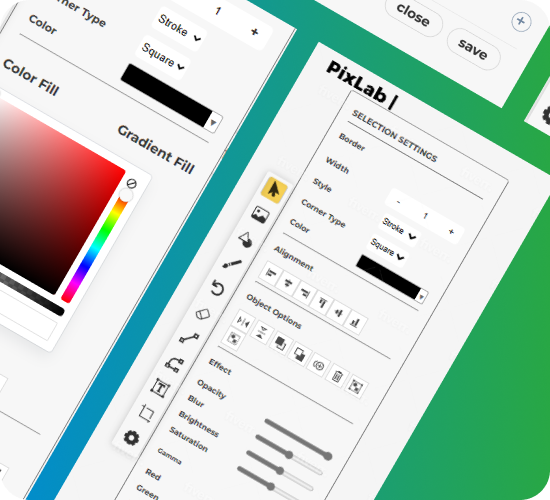

AI Photo Editor

PixLab AI Photo Editor → is your go-to free online tool for editing, designing logos, creating generative art, and making social media graphics. Effortlessly edit, enhance, and create stunning visuals using smart tools designed for all your creative needs.

- AI Photo Editor ↗ ensures professional, consistent results tailored to your unique style.

- Quickly remove unwanted elements → like people, objects, or backgrounds with just one click.

- Use the Freehand Drawing tool ↗ for annotations, sketches, or personal touches with customizable brushes and smooth control.

Online Creativity Tools

Access a suite of powerful web tools and applications powered by PixLab's APIs. From bulk image editing and AI-driven design to document automation and UI code generation, streamline your workflow with cutting-edge solutions designed for developers, designers, and businesses.

- AI Video Editor - Create and edit videos online directly in your browser. Trim, cut, merge, add captions and effects, and export to MP4/WebM. Built on WebAssembly, WebGL/WebGPU, and PixLab VLM APIs. Try Now ↗

- Annotate - Efficiently label, segment, and annotate images online at scale for your machine learning training data—all within your browser ↗.

- Bulk Background Removal Tool - Remove backgrounds from batches of images instantly, powered by the BG-REMOVE API Endpoint →.

- Convert Box - Online file and media converter. Supports over 239 formats, including images, documents, audio, and videos.

- AI Photo Editor - A full-featured online image editor with professional tools.

- Vision Workspace ↗ - OCR, AI-assisted writing, smart spreadsheets, document chat, and productivity tools in one place.

- App UI/UX - Generate stunning UI for mobile apps in SwiftUI, React Native, Jetpack Compose, and Flutter.

- Screenshot Editor - Edit screenshots online with advanced filters and effects.

- Creative Toolbox - Design assets, edit screenshots, generate mockups, and create social media content.

- 2D Tilemap Maker - A free tool for designing 2D game tilemaps and levels.

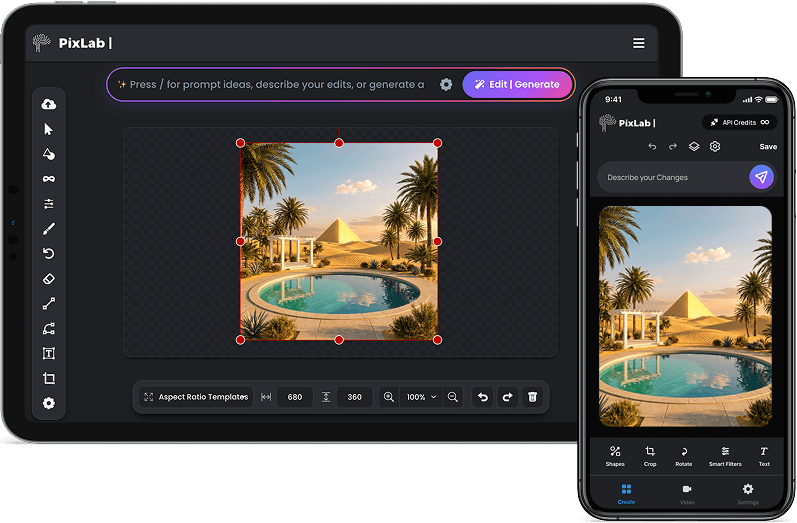

PixLab iOS Photo Editor - AI-Powered Mobile Editing

Transform your iPhone and iPad into a powerful AI-powered photo editor. The PixLab iOS Photo Editor → brings prompt-based editing, professional tools, and seamless cloud sync to your mobile device. Generate stunning images from text prompts, edit with precision, and create videos on the go.

- Prompt-Based Editing: Edit photos by typing what you want—no complex tools or layers needed. Simply describe your vision and let AI do the work.

- Professional Tools: Access advanced layer support, color grading, retouching tools, and blend modes designed for touch.

- AI Style Generator: Transform images with curated presets like Cinematic, Anime, or Oil Painting styles.

- Cloud Sync: Start on iPhone, finish on iPad or editor.pixlab.io ↗. Your projects sync instantly across all devices.

- Text-to-Video: Generate short clips or animate static images with advanced AI video generation.

- Universal Export: Export in any format from transparent PNGs to 4K MP4s with custom aspect ratios for social media.

Boost Productivity and Automation

Transform Your Workflow with Intelligent Automation

Leverage AI-powered Tools & APIs → to streamline processes, boost collaboration, and maximize productivity. Automate tasks seamlessly and focus on what truly matters.

Machine Vision & Media Analysis

Leverage cutting-edge AI models, tools & APIs to analyze, classify, and enhance images with unparalleled accuracy.

Automate Media Processing

Programmatically automate image enhancement, background removal, and tensor library operations with AI-driven precision.

Boost Productivity

Streamline workflows, reduce manual effort, and accelerate production with PixLab Vision Platform → & ID Scan & Extract API →.

FAQ

Got Questions About PixLab?

Find clear and concise answers to the most common questions.

How do I get started with PixLab?

Getting started with PixLab is straightforward. Pick the tool or API that fits your project, then use the following resources:

- Explore a variety of public APIs covering image analysis, vision-language models, ID scanning, facial recognition, PDF generation, and more in the API Portal.

- Browse the API Endpoints for a full list of PixLab API Endpoints.

- Check the REST API Guide to learn how to make your first API call in your favorite programming language.

- Generate your API Key in the PixLab Console ↗.

- For identity verification, visit the ID Scan Platform.

- For document chunking, textual data parsing & extraction, LLM tool calling, and an OpenAI-compatible API, use the Vision Platform and its accompanying Workspace App ↗.

- Finally, try PixLab's online tools, such as the AI Photo Editor, Bulk Background Removal, App UI/UX Coder, and Creative Toolbox.
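Once you have an API key, a first call usually comes down to building a URL with your parameters and key, sending it, and decoding the JSON reply. The sketch below shows that flow with the Python standard library. Two details are assumptions to verify against the REST API Guide: the base URL `https://api.pixlab.io` and the name of the API-key query parameter (`key`). The endpoint name `example-endpoint` is a hypothetical placeholder, not a real PixLab endpoint.

```python
import json
import urllib.parse
import urllib.request

# Assumption: base URL for PixLab endpoints; confirm in the REST API Guide.
BASE_URL = "https://api.pixlab.io"


def build_request_url(endpoint: str, params: dict, api_key: str) -> str:
    """Return a GET URL for `endpoint` with params and the API key encoded."""
    query = dict(params)      # copy so the caller's dict is not modified
    query["key"] = api_key    # assumed name of the API-key parameter
    return f"{BASE_URL}/{endpoint.strip('/')}?{urllib.parse.urlencode(query)}"


def call_endpoint(endpoint: str, params: dict, api_key: str) -> dict:
    """Send the GET request and decode the JSON body (needs network access)."""
    url = build_request_url(endpoint, params, api_key)
    with urllib.request.urlopen(url, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Keeping URL construction separate from the network call makes the request easy to inspect and test before spending quota on it.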

Where are my output assets stored?

Your processed media assets are stored securely. If you've linked your own AWS S3 bucket via the PixLab Console ↗, your assets are stored exclusively in that bucket instead of PixLab's public storage. This gives you greater control, security, and compliance, which makes it the preferred choice for businesses handling sensitive data.

Who can benefit from PixLab Vision?

PixLab Vision Platform → and the Workspace App ↗ are designed for:

- Professionals & Businesses: Automate office workflows, extract data, and enhance productivity.

- Developers & Teams: Process large-scale documents, integrate OCR, and build advanced applications.

- Creatives & Analysts: Edit text, manage spreadsheets, and extract insights effortlessly.

Explore the Vision Workspace ↗ to see how PixLab Vision can fit your specific needs.

Can I upgrade my current plan?

You can upgrade your current plan to accommodate your growing needs directly from the PixLab Console ↗.

Can I downgrade my current plan?

Downgrading your current plan is possible. To downgrade, please submit a subscription management ticket via the support tab in the PixLab Console ↗.

What happens if I exceed my monthly quota?

If you exceed your monthly quota, additional requests are billed at a low rate of $0.009 per 1,000 requests. However, media processing requests are always free for Business & Enterprise plan customers. You'll receive an email alert when you reach 90% of your monthly quota to help you manage usage. For full details, visit the Pricing page.
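To make the overage arithmetic concrete, the sketch below computes the charge at $0.009 per 1,000 extra requests and checks the 90% alert threshold, using the figures stated above. It assumes the charge is prorated per request; actual billing may round to whole blocks of 1,000, and the free media processing for Business & Enterprise plans is not modeled. The function names are illustrative, not part of any PixLab SDK.

```python
from decimal import Decimal

# Figures from the FAQ; check the Pricing page for current values.
OVERAGE_RATE_PER_1000 = Decimal("0.009")  # USD per 1,000 requests over quota
ALERT_THRESHOLD = Decimal("0.9")          # email alert at 90% of the quota


def overage_cost(requests_used: int, monthly_quota: int) -> Decimal:
    """USD charge for requests beyond the quota (assumes per-request proration)."""
    extra = max(0, requests_used - monthly_quota)
    return Decimal(extra) * OVERAGE_RATE_PER_1000 / 1000


def should_alert(requests_used: int, monthly_quota: int) -> bool:
    """True once usage reaches 90% of the monthly quota."""
    return Decimal(requests_used) >= ALERT_THRESHOLD * monthly_quota
```

For example, with a 100,000-request quota and 350,000 requests used, 250,000 requests are over quota, so `overage_cost` returns 250 × $0.009 = $2.25. `Decimal` avoids the rounding drift that binary floats introduce in currency calculations.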

Do you offer SLAs (Service Level Agreements)?

Service Level Agreements (SLAs) are not included in standard plans. However, enterprise-level clients and those with critical business needs may request a customized 99.9% SLA uptime guarantee with PixLab | Symisc Systems ↗. Refer to the PixLab Console ↗ for additional information.

How can I get support if I need integration assistance?

If you need assistance, PixLab offers multiple support options:

- Developer Docs: Check our API Endpoints → for technical guidance.

- PixLab Console: Manage your API keys, track usage, and access resources via the PixLab Console ↗.

- Community & Support Tickets: Reach out via our support portal → or open a ticket for technical queries.

- Enterprise Support: Dedicated assistance for enterprise clients through service agreements.

Visit the PixLab Console ↗ to explore more.

Customer Testimonials

What Our Users Say

Whether you're an indie developer, freelancer, or business, PixLab is designed to enhance your workflow.